Kafka: A quick view

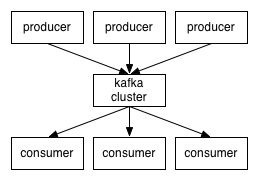

Kafka is a distributed, partitioned, replicated commit log service. It provides the functionality of a messaging system, but with a unique design.

A little vocabulary:

- A producer is a process that publishes messages to a Kafka topic.

- A consumer is a process that subscribes to topics and processes the feed of published messages.

- A Kafka cluster consists of one or more servers, each of which is called a broker.

- A topic is a category or feed name to which messages are published. For each topic, the Kafka cluster maintains a partitioned log with one or more partitions.

- The partitions of the log are distributed over the servers in the Kafka cluster (Kafka uses ZooKeeper to manage the partitions), with each server handling data and requests for a share of the partitions. Each partition is replicated across a configurable number of servers for fault tolerance.
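
To make the last point concrete, here is a minimal shell sketch of how partitions and their replicas could be spread over brokers. It uses a plain round-robin placement chosen purely for illustration; it is not Kafka's actual assignment algorithm, and the function name assign_replicas is hypothetical.

```shell
# assign_replicas PARTITIONS BROKERS REPLICATION_FACTOR
# Illustrative round-robin placement (NOT Kafka's real algorithm).
# Brokers are numbered 0..BROKERS-1; replica r of partition p goes
# to broker (p + r) modulo BROKERS.
assign_replicas() {
  partitions=$1; brokers=$2; rf=$3
  p=0
  while [ "$p" -lt "$partitions" ]; do
    replicas=""
    r=0
    while [ "$r" -lt "$rf" ]; do
      # Append the broker id, comma-separated after the first one.
      replicas="${replicas:+$replicas,}$(( (p + r) % brokers ))"
      r=$((r + 1))
    done
    echo "partition $p -> brokers $replicas"
    p=$((p + 1))
  done
}
```

For example, assign_replicas 3 3 2 places partition 0 on brokers 0,1, partition 1 on brokers 1,2 and partition 2 on brokers 2,0, so losing any single broker still leaves one copy of every partition.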

Start Kafka as a service (with Upstart's initctl)

Create the following two configuration files:

- /etc/init/kafka.conf with:

description "Kafka Broker"

limit nofile 32768 32768

start on runlevel [2345]

stop on runlevel [!2345]

respawn

respawn limit 2 5

env CONFIG_HOME=/etc/kafka

env KAFKA_HOME=/usr/lib/kafka

umask 007

kill timeout 300

setuid kafka

setgid kafka

script

. /etc/default/kafka-broker

if [ "x$ENABLE" = "xyes" ]; then

exec $KAFKA_HOME/bin/kafka-server-start.sh $CONFIG_HOME/server.properties

fi

end script

- /etc/init/kafka.zookeeper.conf with:

description "Kafka ZooKeeper"

start on runlevel [2345]

stop on starting rc RUNLEVEL=[016]

respawn

respawn limit 2 5

env KAFKA_HOME=/usr/lib/kafka

env CONFIG_HOME=/etc/kafka

umask 007

kill timeout 300

setuid kafka

setgid kafka

exec $KAFKA_HOME/bin/zookeeper-server-start.sh $CONFIG_HOME/zookeeper.properties

These folders may differ depending on how Kafka was installed.
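
The script stanza of the broker job sources /etc/default/kafka-broker and only launches the broker when ENABLE is set to yes. A minimal sketch of that file, assuming no other variables are needed on your installation:

```shell
# Sketch of /etc/default/kafka-broker, sourced by the broker job's script stanza.
# Any value other than "yes" keeps the job from launching the broker.
ENABLE=yes
```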

Then start the services in the following order (ZooKeeper first, since the broker needs it at startup):

sudo initctl start kafka.zookeeper

sudo initctl start kafka
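
To confirm that both jobs came up, the output of initctl status can be checked. The helper below is a small sketch: the function name is_running is hypothetical, and it assumes Upstart's usual status line of the form "kafka start/running, process 1234".

```shell
# is_running: reads the output of `initctl status JOB` on stdin and
# succeeds only if the job is reported as start/running.
is_running() {
  grep -q 'start/running'
}

# Example (needs Upstart, so it is left commented out):
#   sudo initctl status kafka.zookeeper | is_running && echo "zookeeper is up"
#   sudo initctl status kafka           | is_running && echo "broker is up"
```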

OS changes

The required OS-level tuning is well described in the Kafka documentation.

To check the system-wide maximum number of file descriptors, run ‘cat /proc/sys/fs/file-max’:

jabberwock@cassiopee:~$ cat /proc/sys/fs/file-max

1631132
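
The value above is the system-wide ceiling. The per-process limit is what the limit nofile 32768 32768 stanza in the Upstart job raises. Here is a small sketch for comparing a limit reported by ulimit -n against that value; the helper name fd_limit_ok is hypothetical.

```shell
# fd_limit_ok CURRENT REQUIRED
# Succeeds when CURRENT (as printed by `ulimit -n`) is "unlimited"
# or numerically at least REQUIRED.
fd_limit_ok() {
  current=$1; required=$2
  [ "$current" = "unlimited" ] && return 0
  [ "$current" -ge "$required" ]
}

# Check the limit of the current shell against the value used in the job.
if fd_limit_ok "$(ulimit -n)" 32768; then
  echo "open-file limit is sufficient"
else
  echo "open-file limit is below 32768"
fi
```

Note that this checks the shell it runs in; the broker's effective limit is the one set by the Upstart job.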

Kafka in docker

A very good tutorial is available on the wurstmeister GitHub page.

Kafka offset commands

Offset for topic

This command prints the latest offset of each partition of a given topic (--time -1 requests the latest offset, -2 the earliest).

./bin/kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list hostname:port --topic topicName --time -1
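
GetOffsetShell prints one line per partition. Assuming the topic:partition:offset layout (an assumption worth checking against your Kafka version), the per-partition values can be summed to get a single figure for the topic. The function name total_offset is hypothetical.

```shell
# total_offset: reads lines of the assumed form "topic:partition:offset"
# on stdin and prints the sum of the offset column.
total_offset() {
  awk -F: '{ sum += $3 } END { print sum + 0 }'
}

# Example (needs a running broker, so it is left commented out):
#   ./bin/kafka-run-class.sh kafka.tools.GetOffsetShell \
#     --broker-list hostname:port --topic topicName --time -1 | total_offset
```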

Export Offset

This command reads the offsets that a consumer group has stored in ZooKeeper (ZK) for each broker partition and writes them to an output file.

./bin/kafka-run-class.sh kafka.tools.ExportZkOffsets --zkconnect hostname:port --group consumerGroup --output-file ~/output.txt

Import Offset

This command imports offsets for a topic's partitions. The file format is the same as for the export. Be careful: this command affects consumer load, because rewinding an offset causes messages to be re-processed. All consumers must be stopped before changing the offsets.

./bin/kafka-run-class.sh kafka.tools.ImportZkOffsets --zkconnect hostname:port --input-file ~/output.txt
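
Because the import file uses the same layout as the export, a typical workflow is export, edit, import. The sketch below rewrites the offset of every entry in an exported file. It assumes each line has the form znode-path:offset (for example /consumers/consumerGroup/offsets/topic1/0:42); treat that layout as an assumption and check a real export first. The function name set_all_offsets is hypothetical.

```shell
# set_all_offsets NEW_OFFSET
# Reads exported lines on stdin and replaces the trailing ":<digits>"
# of each line with ":NEW_OFFSET"; everything else is left untouched.
set_all_offsets() {
  sed "s/:[0-9][0-9]*\$/:$1/"
}

# Example (stop all consumers of the group first):
#   set_all_offsets 0 < ~/output.txt > ~/rewound.txt
```

Writing to a new file rather than editing in place keeps the original export available as a backup.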

Get the lag

This command prints the consumer offset, log size, and consumer lag for each partition of the named topic. In Kafka 0.8, offsets are logical message positions, so these values count messages rather than bytes.

The lag is the difference between the log size and the consumer offset:

Lag = log size - offset

bin/kafka-run-class.sh kafka.tools.ConsumerOffsetChecker --zkconnect hostname:port --group consumerGroup --topic topic1
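
As a worked example of the formula, the sketch below recomputes the lag per partition and the total from the checker's output. It assumes whitespace-separated columns in the order Group, Topic, Pid, Offset, logSize, Lag, Owner with one header line. That layout and the function name sum_lag are assumptions, and the numbers in the note after the block are made up.

```shell
# sum_lag: reads ConsumerOffsetChecker-style output on stdin.
# Skips the header line, computes lag = logSize - offset for each
# partition (columns 5 and 4), and prints per-partition and total lag.
sum_lag() {
  awk 'NR > 1 && NF >= 5 {
         lag = $5 - $4
         printf "partition %s lag %d\n", $3, lag
         total += lag
       }
       END { printf "total lag %d\n", total }'
}

# Example (needs a running cluster, so it is left commented out):
#   bin/kafka-run-class.sh kafka.tools.ConsumerOffsetChecker \
#     --zkconnect hostname:port --group consumerGroup --topic topic1 | sum_lag
```

Fed two partitions with offsets 40 and 90 and a log size of 100 each, it reports lags of 60 and 10 and a total of 70.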

You can also monitor the MaxLag and MinFetch JMX beans (see http://kafka.apache.org/documentation.html#monitoring).

Delete a topic

Deleting a topic does not always work in 0.8.1.1. It should work in the next release, 0.8.2 (see KAFKA-1397).

In the meantime, there is a manual workaround:

- Stop the brokers.

- Delete the partition folders for this topic on all brokers.

- Delete the topic-partition znode in ZK under the /brokers path.

- Restart the brokers.
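
Since the second and third steps are destructive, it can help to list what would be removed before deleting anything. The sketch below is a dry run only. It assumes partition folders are named topic-N inside the broker's log directory (whatever log.dirs points to in server.properties) and that the topic's znode is /brokers/topics/topic. The function name topic_partition_dirs is hypothetical.

```shell
# topic_partition_dirs LOG_DIR TOPIC
# Prints the directories under LOG_DIR named "TOPIC-<digits>".
# The digits-only check avoids matching other topics that merely
# share a prefix, e.g. "foo-bar-0" when TOPIC is "foo".
topic_partition_dirs() {
  log_dir=$1; topic=$2
  for d in "$log_dir/$topic"-*; do
    [ -d "$d" ] || continue
    name=${d##*/}
    suffix=${name#"$topic"-}
    case "$suffix" in
      ''|*[!0-9]*) continue ;;
    esac
    echo "$d"
  done
}

# Example dry run (the log directory below is a placeholder):
#   topic_partition_dirs /path/to/kafka-logs topicName
#   echo "znode to delete: /brokers/topics/topicName"
```

Run it on every broker while the brokers are stopped, and review the output before removing anything by hand.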